Solving the Problem of Siloed Intelligence in the Age of AI With MCP

Why the Model Context Protocol (MCP) Could Be the Key to Interoperability Across Platforms, Data Portability, and Avoiding Vendor Lock-in

When we talk about AI, most people think in terms of applications — ChatGPT, Copilot, Gemini, Claude, etc. These stand-alone chat experiences have proven to be incredibly useful as assistants and sounding boards. But now, AI has become deeply integrated into the platforms we use to manage the data of our day-to-day work.

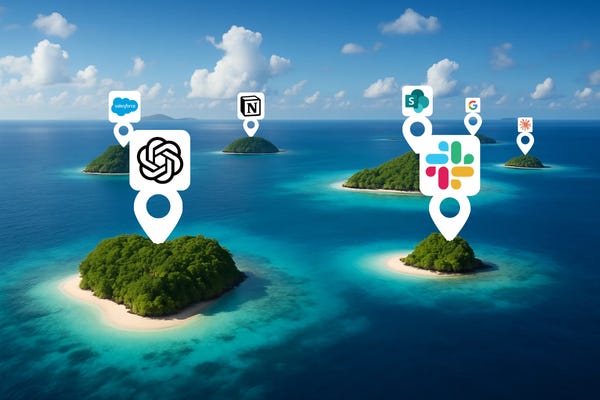

This shift is creating what I call silos of intelligence: AI-powered, but locked away in contextual islands.

The problem is, humans rarely work in a single platform. The source and context of the data we want to apply AI to (the stuff we want to gain insight on and build operational value with) often live across a variety of systems. Work documents in SharePoint, team chats in Slack, personal notes in Notion, and customer data in Salesforce, each with its own AI.

According to Okta’s 2024 Businesses at Work report, the average enterprise uses more than 200 different applications. Most don’t share data seamlessly, but nearly all have some AI solution built in. That’s a lot of disconnected intelligence.

This growing fragmentation is exactly why standards like MCP matter — to help bridge those gaps and make data context universally accessible.

What is MCP?

Model Context Protocol (MCP) is an open standard introduced by Anthropic in 2024 to make LLMs more practical, safe, and extensible. Although not yet universally adopted, MCP has quickly become one of the most prominent emerging standards for enabling interoperability across AI ecosystems.

Instead of treating AI like a black box, MCP formalizes how models communicate with data sources (APIs, databases, files) and tools (automation scripts, apps, workflows).

It separates the model from the context — ensuring that models aren’t reasoning in isolation, but with the right inputs.

In practice, MCP acts like a translation layer: the AI doesn’t need to know the specifics of your Salesforce API, your Notion database, or your Slack workspace. If an MCP server exists for it (more on that in a minute), the AI can interact seamlessly.
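To make the translation-layer idea a little more concrete, here is a minimal sketch of what a request to an MCP server can look like on the wire. MCP messages follow JSON-RPC 2.0, and `tools/call` is the method the specification defines for invoking a tool. Everything else is an assumption for illustration: the helper `build_tool_call` and the tool names `search_records` and `search_pages` are hypothetical, not part of any real server.

```python
import json
from itertools import count

_request_ids = count(1)  # each JSON-RPC request carries its own id


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request for MCP's `tools/call` method."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool names: the envelope has the same shape whichever
# system (CRM, notes app, chat workspace) sits behind the server.
crm_request = build_tool_call("search_records", {"query": "Acme Corp"})
notes_request = build_tool_call("search_pages", {"query": "Acme Corp"})
print(crm_request)
```

The point of the sketch is that only the tool name and arguments change between systems; the model-facing request format stays the same.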

Making Context Universally Accessible

MCP isn’t primarily about triggering actions, the way a Power Automate or Zapier workflow typically works. Instead, it standardizes context-sharing. This gives any model the ability to tap into a variety of tools, data, and workflows without having to rebuild the model’s understanding of your data. This means your workflows have portability across a variety of LLMs. Whichever model you choose can tap into the same context to help you make better decisions, uncover insights, or optimize workflows.

MCP enables universal connectivity without retraining or building custom pipelines for every app. It’s the operating system layer that allows AI platforms to scale.

The Adoption Landscape

Logic and history both suggest that big vendors gain the most when they keep your data locked inside their ecosystems. Google, Microsoft, and even OpenAI have built their moat strategies around data, user experience, and integration.

Yet, over the past year, MCP has seen significant industry-wide adoption — including from the very companies once thought to resist it.

Anthropic (Claude) → The originator of MCP and still its leading contributor. Anthropic continues to maintain the core standard and reference implementations.

OpenAI (ChatGPT) → In March 2025, OpenAI announced MCP integration across its products, including the Agents SDK and ChatGPT desktop app. Their developer documentation now includes official guidance for using MCP.

Microsoft (Copilot) → Microsoft partnered with Anthropic to release the official C# SDK for MCP, integrated MCP into Copilot Studio and the Semantic Kernel, and announced plans to embed MCP support directly into Windows 11 for secure agent interactions.

Google (Gemini) → Google’s Agent2Agent (A2A) protocol is designed to complement MCP. Google co-developed the official Go SDK and released a public MCP server for its Data Commons project to make datasets more accessible to AI agents.

Others (Mistral, Cohere, Llama) → Open-source developers and smaller vendors are adopting MCP for interoperability across open and proprietary systems.

The multi-platform, agent-driven world has broken the lock-in model. It doesn’t scale anymore. Interoperability has suddenly become a competitive advantage.

And that has largely come from customer demand, open‑source pressure, and network effects. As developer ecosystems form around connectivity, vendors are worried they’ll be left out.

But don’t be fooled.

It’s not altruism that’s driving them to adopt MCP. In my opinion, lock-in is still the goal. Owning the experience, intelligence, and optimization layers on top of that open protocol enables them to keep you engaged in their platform.

Still, MCP is a rising tide that lifts all boats. And the key to all of this is the MCP server.

What are MCP Servers?

If MCP is the protocol, MCP Servers are the gateway — bridging the gap with efficiency and universal connectivity.

For the average enterprise, 200 different applications means 200 different places where data is stored, processed, and collaborated on. Each of those applications has its own architecture, permissions, and API, and replacing or integrating any one of them can cause a cascade of disruption.

MCP servers abstract away that complexity. Instead of rebuilding integrations for every system, an MCP server standardizes how models talk to them.

A server is like a pre-fabricated bridge that can be deployed anywhere, quickly, to connect the LLM and the resource with minimal alignment of permissions and structures.

It provides structured context — exposing tools, functions, and data to the AI in a standardized way.

Multiple servers can be combined, letting you build a constellation of connected systems.

More importantly, MCP Servers allow systems to easily swap out language models or data sources, based on the workload, demands, or evolving needs.
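As a rough illustration of what "exposing tools in a standardized way" can mean, the sketch below is a toy, in-memory stand-in for an MCP server, written with only the Python standard library. It is not the official SDK, and it skips transport, initialization, input schemas, and authentication. `ToyServer`, `lookup_customer`, and the sample data are hypothetical; only the method names `tools/list` and `tools/call` and the general shape of the results are taken from the MCP specification.

```python
from typing import Any, Callable


class ToyServer:
    """In-memory stand-in for an MCP server (illustrative only)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._tools: dict[str, tuple[str, Callable[..., str]]] = {}

    def tool(self, description: str):
        """Decorator that registers a function as a callable tool."""
        def register(fn: Callable[..., str]) -> Callable[..., str]:
            self._tools[fn.__name__] = (description, fn)
            return fn
        return register

    def handle(self, request: dict[str, Any]) -> dict[str, Any]:
        """Answer a JSON-RPC style request for tools/list or tools/call."""
        method = request["method"]
        if method == "tools/list":
            result = {"tools": [{"name": n, "description": d}
                                for n, (d, _) in self._tools.items()]}
        elif method == "tools/call":
            params = request["params"]
            _, fn = self._tools[params["name"]]  # unknown names raise KeyError here
            text = fn(**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}]}
        else:
            # -32601 is the JSON-RPC 2.0 code for "Method not found"
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


crm = ToyServer("crm")


@crm.tool("Look up a customer by name (hypothetical sample data).")
def lookup_customer(name: str) -> str:
    customers = {"Acme Corp": "Acme Corp: renewal due in Q3"}
    return customers.get(name, "no match")


listing = crm.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
answer = crm.handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                     "params": {"name": "lookup_customer",
                                "arguments": {"name": "Acme Corp"}}})
print(answer["result"]["content"][0]["text"])
```

A client first asks what the server offers (`tools/list`), then calls a tool by name. The model never sees how `lookup_customer` reaches its data, which is the abstraction the section describes.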

The Benefits of MCP Servers in Practice:

Responding to a Company-Wide System Change: Imagine your company migrates from Google Workspace to Microsoft 365. Your AI assistant, which uses a Google MCP Server to summarize emails and schedule meetings, doesn’t need to be rebuilt. You simply swap in the Microsoft 365 MCP Server, and the AI continues its work without interruption. The core workflow is preserved, saving significant development time.

Upgrading Your Sales and Support Tools: Your startup initially used a Notion MCP Server as a simple CRM and knowledge base. As you grow, you upgrade to a dedicated Salesforce MCP Server for customer data and a Zendesk MCP Server for support tickets. Instead of building new integrations, you just point your AI to these new, more powerful servers. It can now pull customer history and support context using the same universal language it always has.

Connecting a Central Knowledge Hub: Your AI is configured to answer internal questions by connecting to company documents via a SharePoint MCP Server. If your research team keeps its most vital data in a separate Confluence space, you can simply add a Confluence MCP Server. Now, when you ask a question, the AI can draw context from both sources seamlessly to give you a complete answer.

Instead of scraping or guessing, the AI makes requests through MCP and receives structured responses it can reason over.
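The swap-and-add pattern in the three scenarios above can be sketched in a few lines. This is a simplified model, not a real integration: each "server" is just a dictionary of functions, and every name and return value is hypothetical. It also assumes the replacement server exposes a tool with the same name and arguments as the one it replaces. Real servers from different vendors often differ in tool names and schemas, which is why, in practice, the model reads each server's tool list at runtime instead of relying on hard-coded names.

```python
from typing import Callable

# A "server" here is a mapping of tool name -> function, standing in for a
# real MCP server. All names and return values are hypothetical.
ToolSet = dict[str, Callable[[str], str]]


def google_server() -> ToolSet:
    return {"search_mail": lambda q: f"[Gmail] 2 threads mention '{q}'"}


def microsoft_server() -> ToolSet:
    return {"search_mail": lambda q: f"[Outlook] 2 threads mention '{q}'"}


def confluence_server() -> ToolSet:
    return {"search_wiki": lambda q: f"[Confluence] 1 page mentions '{q}'"}


def gather_context(servers: dict[str, ToolSet], query: str) -> list[str]:
    """Call every tool on every connected server and collect the results."""
    results = []
    for server_name in sorted(servers):
        for tool_name in sorted(servers[server_name]):
            results.append(servers[server_name][tool_name](query))
    return results


# Before the migration: one mail server.
before = gather_context({"mail": google_server()}, "budget")

# After: the mail server is swapped and a wiki server is added.
# gather_context itself is unchanged.
after = gather_context(
    {"mail": microsoft_server(), "wiki": confluence_server()}, "budget"
)
print(before)
print(after)
```

The workflow code (`gather_context`) stays the same while the set of connected servers changes, which is the portability the scenarios describe.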

Practical Ways to Use MCP for a Personal AI Platform

To make this tangible, imagine how MCP could unify SharePoint, Slack, Notion, and Salesforce:

You draft a proposal in SharePoint.

Your team discusses it in Slack.

You’ve captured supporting research in Notion.

And your customer details live in Salesforce.

Normally, these stay siloed (unless you build custom connectors and workflows). With MCP, your AI assistant could pull context from all four at once: drafting an executive summary that references the SharePoint document, includes key Slack discussion points, cites Notion research, and tailors the pitch with Salesforce data.

This is the power of context-sharing. And beyond this example, there are many ways MCP can be applied:

Knowledge Hub — Connect notes (Notion, OneNote, Obsidian) and files (SharePoint, Google Drive) so your AI can draft with your personal knowledge.

Workflow Orchestrator — Link task managers like Planner or Asana, letting AI suggest priorities or draft updates.

Inbox + Calendar Assistant — Use MCP to summarize email threads, prep you for meetings, or draft responses.

Research & Monitoring — Plug into RSS feeds or APIs so your AI flags trends in real time.

Custom Agents — Build specialized AI roles (Chief of Staff, Researcher, Project Manager) all pulling from the same consistent context.

Why MCP Matters

MCP is more than a technical spec — it reframes AI adoption. As part of the Be AI Ready approach, MCP can play a critical part in future-proofing systems.

Reduced Vendor Lock-In: You are not trapped in a single vendor’s ecosystem (like Microsoft, Google, or OpenAI).

Interoperability: MCP allows you to connect and pull context from normally siloed systems like SharePoint, Slack, and Salesforce all at once.

Simplified Development: You avoid the duplicated effort of building and maintaining separate, custom integrations for every tool and every LLM.

Accelerated Innovation: It creates a common foundation that makes it easier for developers to build new tools and agents.

The organizations (and individuals) that win in the AI era won’t be the ones with the most tools — they’ll be the ones that build platforms that can tap into pools of context seamlessly and without restraint.

What if MCP Doesn’t Gain Traction?

It’s worth asking: what happens if MCP stalls and isn’t widely adopted?

Fragmented Ecosystems — Users will be forced to choose between vendor silos (Microsoft, Google, OpenAI), with little portability of data or workflows.

Duplicated Effort — Each LLM will require separate connectors, integrations, and governance, increasing cost and complexity.

Stifled Innovation — Smaller vendors and open-source players will struggle to compete without a standard to level the playing field.

In contrast, widespread adoption of MCP would:

Create interoperability by default, letting any AI tap into the same context.

Reduce lock-in and shift power back to users and organizations.

Accelerate innovation by making it easier for developers to build on top of a common foundation.

The future of AI may well depend on whether MCP (or a similar standard) becomes the connective tissue — or whether we accept a fractured landscape dominated by closed ecosystems.